Asimov's Three Laws

The idea of a “robot uprising”, in which hyper-intelligent robots overthrow their human makers, is so common in today’s media that it has become a cliché. While it may seem overplayed, and by extension less likely to actually happen, it is rooted in a valid concern that has been around for as long as robots themselves. The most famous attempt to address this concern is Isaac Asimov’s “Three Laws of Robotics”, which were meant to prevent any such uprising and have shaped much of the thinking about robot behavior in the decades since.

Asimov’s Laws were first written down in his short story “Runaround”, later collected in I, Robot (which you may recognize from the dystopian robot uprising movie of the same name). They are as follows:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. A robot must obey orders given it by human beings except where such orders would conflict with the First Law.
3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

These laws were meant to keep robots in service to their human creators and to prevent them from causing harm to humans. Under the First Law, robots are expressly prevented from harming humans, either directly or indirectly. For example, a robot would be unable to push someone into harm’s way, and if it saw someone in danger it would also be obligated to rescue them. Under the Second Law, robots are explicitly bound to follow human commands: they must do as they are told, as long as doing so does not involve harming a human. Finally, under the Third Law, robots have a responsibility to keep themselves “alive”, but only if they can do so while following the first two laws.

You may find yourself thinking that Asimov’s Laws don’t seem like enough to prevent robots from having a negative impact on the world, and you’d be exactly right. While they make sense in theory, there are two main issues with Asimov’s Laws that many roboticists struggle with in practice. First, there are many loopholes that a person, or a highly intelligent robot, could use to cheat the system. Second, humans and robots think about problems very differently: even if a robot follows what it believes to be the laws, its interpretation might not line up with a human’s. The First Law, for instance, never defines “harm”, so a robot could reasonably conclude that a surgeon’s incision counts as injuring a human.

Like everything else in the field of robotics, the laws governing how robots behave need to evolve. Many roboticists are already working hard on this challenge, and solving it would create a better world for both humans and their robot counterparts.

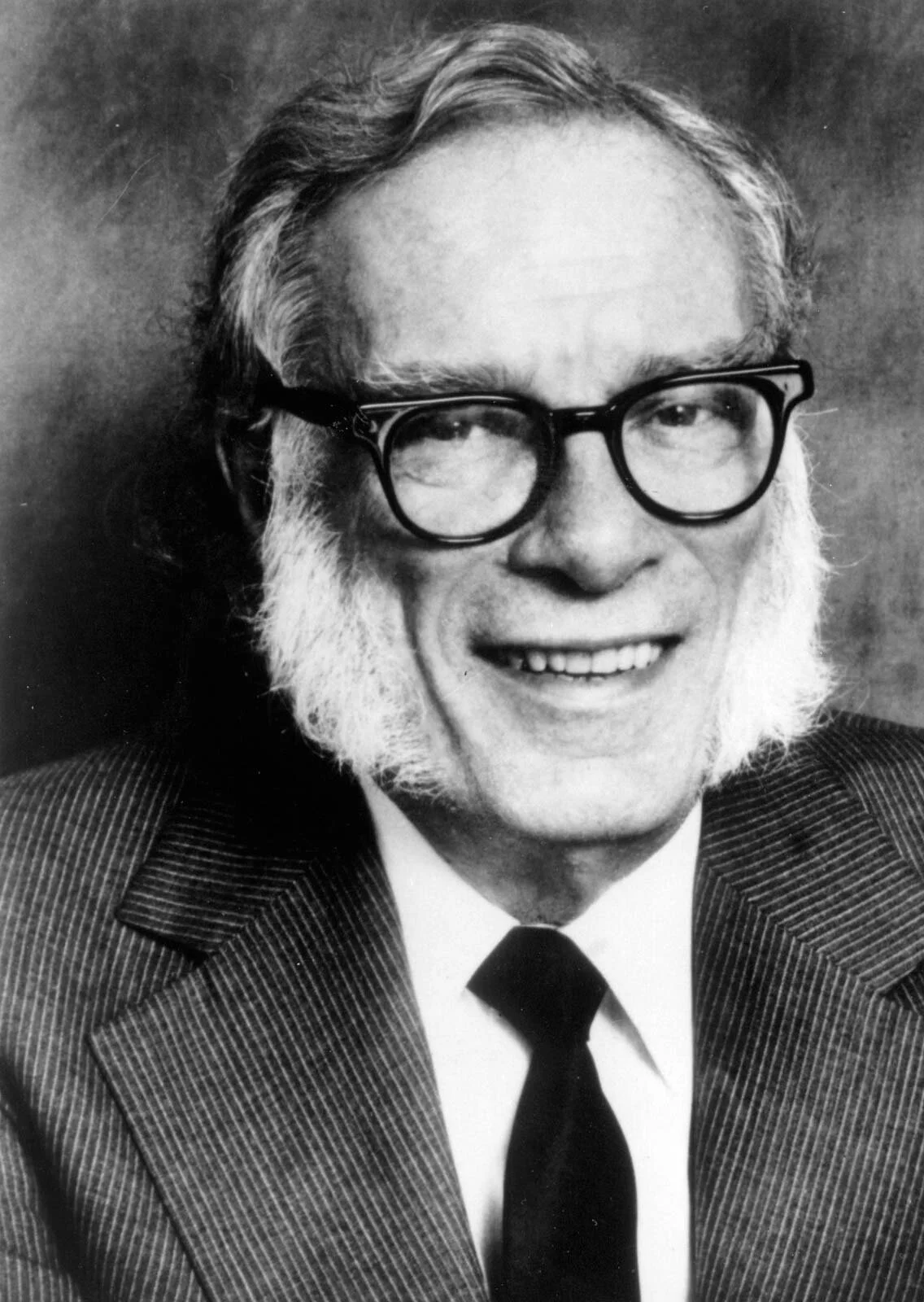

Isaac Asimov

Picture Source: britannica.com